Will A.I. save us from Climate Change or make it worse?

As we navigate the Third Golden Age of A.I., there is a great deal of both hope and dread about its impact on the climate. Optimisation vs energy demands, efficiency vs emissions. Where will it lead?

For this article, I’m delighted to be joined by my good friend and co-author John MacIntyre, Professor of Artificial Intelligence, Chancellor of the University of the Commonwealth of the Caribbean (UCC), and Editor of both the International Journals of A.I. and Ethics and Neural Computing and Applications. John and I collaborated on and co-authored a lot of papers in the 1990s and it’s a great pleasure to work with him again.

The third golden age of A.I.

We all now live in the so-called ‘Third Golden Age’ of Artificial Intelligence. A.I. has been around a long time – in fact since WW2, when the first computer model of a single human brain cell (a neuron) was created by Warren McCulloch and Walter Pitts. Through the 1960s and 1970s A.I. developed but then entered a ‘dark age’ in which there seemed no useful applications of this research. Then in the 1980s the ‘Second Golden Age’ spawned from more complex artificial neural network models, with hundreds of artificial neurons connected in ways that allowed them to model more complex data and solve more complex problems. Back Propagation training was developed, which is still used extensively today. Soon, though, the over-hype and under-delivery of these complex, mathematically dense models led to a second ‘dark age’ where A.I. was seen again as the province of lab-coat boffins and mad professors. And then the ‘Third Golden Age’ ignited with the development of Generative A.I., tools which can generate new responses to instructions and questions which anyone can give them.

When OpenAI released ChatGPT in November 2022 the world changed forever. No longer was Artificial Intelligence the domain of technology experts and computer scientists. Generative A.I. had arrived and was now in the hands of the public at large. The intuitive design and surprising fluency made it an instant hit, attracting over 1 million users within five days. By early 2023, it had become a household name, prompting widespread experimentation across education, business, and creative industries. Generative A.I. has become the disruptive technology of its time, arguably as disruptive as electricity or the internet in previous ages.

Even though most people have now at least heard of the term “A.I.” many don’t really know what that means, and hardly anyone is aware that the field of A.I. is much more than Generative A.I. tools like ChatGPT, Claude, Gemini, Llama, and many more. For every large Generative A.I. tool in use, there are hundreds or thousands of smaller models consisting of only a few thousand neurons, but trained on specific focussed tasks with a tiny fraction of the computing and energy demands.

A.I. is everywhere, and it is affecting your life every day, whether you realise it or not. A.I. is so deeply embedded in society that many businesses and daily activities depend on some form of A.I. tech, and it is often being used without the knowledge or consent of customers. Of course, A.I. excels in tasks like language, vision, and prediction—and its future promises more personalised, multimodal, and autonomous systems across nearly every domain. A.I. models outperform humans in tasks like image classification, visual reasoning, and English comprehension. Most of the recent drive in A.I. has come from private companies like OpenAI, Google, and Meta, with Elon Musk giving the world Grok as part of his contribution to society.

Climate Change background

Advances in A.I. and the costs of their development have to be set against the background context of our changing climate. They promise to deliver insight, and even solutions, but they also come at a cost.

The latest observational data show that global warming is accelerating, bringing increasing and long term changes which are driving ever more extreme weather events. It is also categorically known that human greenhouse gas emissions, from the burning of fossil fuels, material processing (cement and steel production) and from land use changes are the cause of these changes.

The recent accelerated warming adds to the alarm among scientists as it shows that a transition has occurred, whereby it is not just the greenhouse gases that are directly trapping heat, but feedback mechanisms are now engaging, reducing the Earth’s ability to sequester our emissions and accelerating the energy accumulation within the climate system.

Inertia in the system means that we are heading for much higher temperatures than the 1.5°C Paris target, which will probably be breached in 2026. Unless the world rapidly decarbonises, eliminating fossil fuel use, adopts zero emission agriculture and halts land use changes, the 2°C upper target will be breached in the late 2030s and a 3°C world is entirely possible by 2050 [1]. This will bring an equivalent accelerated worsening of impacts including storms, fires, droughts, heatwaves and sea level rise.

What effects will A.I. have on our carbon emissions and how can A.I. help us tackle the climate crisis?

A.I. Energy and resource use

Looking at the costs first. Apart from the resources used in the building of data centres for A.I., including the short life expectancy of the processors, the two main concerns from a climate perspective are energy demand and water usage. Energy demand is significant. A.I. servers running hundreds of thousands of processors are very energy hungry and since all that energy is converted to heat, cooling is expensive too. Over 40% of the energy used by a data centre is used to cool the racks of processors.

Electrical energy is needed both during the training and in the utilisation of A.I. models. One estimate has the recent split as 40% training, 60% utility during a typical large language model’s lifetime. This is already changing in favour of utility as usage expands exponentially. For example, earlier Google models were used on demand, whereas now they are used by virtually every search, massively increasing their utility per model developed. As applications penetrate more and more markets, both tailoring and utility will grow very rapidly. On top of an increase in the number of models trained and their complexity, this will drive the huge expansion expected over the coming years and decades.

Energy demand

According to the IEA, data centres accounted for 1.5% of the world’s electricity consumption in 2024 at 415 terawatt-hours (TWh). They project that this will more than double by 2030 to 945 TWh, and reach 1,200 TWh by 2035 [2].
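As a rough check on what those IEA figures imply, the short Python sketch below works out the growth factor and the equivalent compound annual growth rate. The function names are illustrative, and the only inputs are the figures quoted above.

```python
def growth_factor(start_twh, end_twh):
    """How many times larger the end value is than the start value."""
    return end_twh / start_twh


def annual_growth_rate(start_twh, end_twh, years):
    """Compound annual growth rate that takes start_twh to end_twh over `years` years."""
    return (end_twh / start_twh) ** (1 / years) - 1


# Data centre electricity use in TWh, as quoted from the IEA in the text.
DEMAND_2024, DEMAND_2030, DEMAND_2035 = 415, 945, 1200

factor_to_2030 = growth_factor(DEMAND_2024, DEMAND_2030)
rate_to_2030 = annual_growth_rate(DEMAND_2024, DEMAND_2030, 2030 - 2024)
rate_2030_to_2035 = annual_growth_rate(DEMAND_2030, DEMAND_2035, 2035 - 2030)

print(f"2024 to 2030: x{factor_to_2030:.2f}, about {rate_to_2030:.1%} a year")
print(f"2030 to 2035: about {rate_2030_to_2035:.1%} a year")
```

On these numbers demand grows by a factor of about 2.3 to 2030, which is close to 15% a year compounded, before slowing to around 5% a year over the following five years.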

The scale of the proposed new data centres is just staggering. One proposed data centre in Alabama requires 1,200MW of power, but OpenAI are proposing up to ten Stargate data centres, each requiring 5,000MW. If they want facilities on this scale, so will the other 4 players in the US alone. Apple have announced plans to spend $500 billion on manufacturing and data centres in the US over the next 4 years and Google budgeted $75 billion for A.I. to be spent in 2025 alone.

To put these numbers in context, the Hinkley Point C nuclear power station currently under construction in the UK will produce 3,200MW, 7% of UK demand. Hinkley C will end up costing £40 billion and won’t be fully operational until 2034 (assuming no further delays). And yet it wouldn’t be big enough to power one Stargate data centre: it would supply just 64% of one. These amounts are huge, but they are also concentrated in just one place. The power industry, even in the US, is simply not at a scale to be able to handle this, especially over a 5 to 10 year time horizon. This is according to the CEO of Constellation, the largest publicly traded power company in the world, who discussed the issue at NY Climate Week in September.
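The comparison can be laid out as a few lines of Python. This is only a sketch using the figures quoted in the text; the implied UK demand is back-calculated from the 7% share and is an assumption rather than a reported value.

```python
HINKLEY_C_MW = 3200          # Hinkley Point C output, as quoted
STARGATE_MW = 5000           # demand of one proposed Stargate site, as quoted
STARGATE_SITES = 10          # "up to ten" sites
HINKLEY_SHARE_OF_UK = 0.07   # Hinkley C as a share of UK demand, as quoted

# Fraction of a single Stargate site that Hinkley C could supply.
share_of_one_site = HINKLEY_C_MW / STARGATE_MW

# Number of Hinkley-sized stations needed for all ten proposed sites.
hinkleys_for_all_sites = STARGATE_SITES * STARGATE_MW / HINKLEY_C_MW

# Assumption: UK demand inferred from "3,200MW is 7% of UK demand".
implied_uk_demand_mw = HINKLEY_C_MW / HINKLEY_SHARE_OF_UK
stargate_share_of_uk = STARGATE_MW / implied_uk_demand_mw

print(f"Hinkley C covers {share_of_one_site:.0%} of one Stargate site")
print(f"Ten sites need about {hinkleys_for_all_sites:.1f} Hinkley C stations")
print(f"One site is about {stargate_share_of_uk:.0%} of implied UK demand")
```

On the quoted figures, the ten proposed sites together would need the output of more than fifteen Hinkley C stations.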

The pace of demand growth

It’s not just the overall growth that is an issue for electricity suppliers, it’s the pace of the growth. In the US, electricity demand growth has been flat for the last 15 years or more. This is helpful from a grid management and development perspective and importantly, it also allows new renewable electricity sources to provide the required growth and start to replace legacy fossil based energy. Electricity demand growth has been forecast as high as 25% by 2030 and 78% by 2050 due to A.I. expansion. This leads to several supply issues that the industry is not prepared for. The implications include higher electricity prices for everyone, a slowing of the energy transition with increased emissions, and more energy wastage.

Increasing the demand for electricity pushes up prices for all users. The IMF estimate electricity price increases of 8.6% due to data centre growth. Increasing prices maintain a high market value on fossil fuels, even highly inefficient fuels such as shale gas and tar sand extracted oil can maintain profitability. Without data centre growth, and with renewables now being cheaper than any other form of generation in history (without tariffs or subsidy) these dirty options would become uneconomic and abandoned in the near future, assisting in the energy transition.

Higher demand on the finite electrical supply drives up the cost of energy across the entire grid, especially in regions with high data centre growth. This means that older, less efficient fossil plants which were becoming unprofitable, become profitable again and will continue to burn fossil fuels and continue to emit greenhouse gases. Analysis by MIT Technology Review shows that data centres in the US use electricity that is 48% more carbon intensive than the national average [3]. The IMF analysis of A.I. impacts on energy use and emissions for 2026-2030 suggests US emissions will increase by 5.5% and global emissions by 1.2% due to A.I. growth (roughly the cumulative energy emissions of Italy over a 5 year period).

The high pace of demand growth also means all new-build power options can make money, regardless of their technology, efficiency, pollution level or cost. It then becomes beneficial to build whatever can be built quickly and easily, without regard to the long term cost of the energy, either environmentally or fiscally. Although wind and solar are cheaper and faster to build than anything else, this still provides some benefit to installing gas powered turbine generators, as has been the case with Elon Musk’s Colossus A.I. supercomputer in Memphis, which has 35 unlicensed gas turbines. OpenAI is also likely to go down the gas route for their in-house energy, having appointed a pro-gas former Trump energy official as their new Energy Chief.

In the US in particular this pace allows recent regressive political policy to provide subsidy for fossil fuel generation and impose tariffs and other barriers on renewables. Even nuclear solutions are inhibited by this rate of demand growth since it takes so long to approve, plan and construct new nuclear reactors. The pace also creates potential delays. The IEA suggest that 20% of planned data centre builds will be delayed through backlogs in obtaining grid connections. This further encourages tech companies to go off grid with gas generation of their own.

Even if a data centre was fully supplied by wind or solar power, its presence and demand for those assets prevent other energy users from accessing them. This is linked to ‘marginal costing’, where the wholesale price of electricity is set by the most expensive asset running on the grid. This is usually gas, as it is the one that is turned on and off to balance demand at the top of the energy stack. This leads to an interesting thought experiment.

Say on a sunny day, 90% of your electricity supply is generated by solar power, the rest through a mix, with gas providing the last 1% and setting the price. You decide to charge your EV believing that you are using 90% clean energy. If you use that charge for an essential journey, then that’s fine, you can enjoy that ethical glow. However if you decided to just go for a drive for the fun of it, with no purpose in mind, are you still 90% clean? The answer is no, you are 100% gas powered because if you had not charged your EV, someone else on the grid would have used those electrons and the gas plant would not have had to provide so much power. An A.I. tech company can claim it is using 100% clean energy, but if their users are just generating tripe or even ignoring the A.I. aspect of their interaction (as in a large proportion of internet searches), then they may as well have been burning ugly dirty coal.
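The difference between the average and the marginal carbon intensity in this thought experiment can be made concrete with a toy merit-order model. The sketch below is a deliberate simplification: the capacities and emission intensities are made-up illustrative values, and real grids dispatch on price and network constraints rather than a fixed list.

```python
def emissions_g(demand_kwh, stack):
    """Fill demand from the first source in the stack upwards; return grams of CO2."""
    remaining = demand_kwh
    total_g = 0.0
    for _name, capacity_kwh, intensity_g_per_kwh in stack:
        used = min(remaining, capacity_kwh)
        total_g += used * intensity_g_per_kwh
        remaining -= used
    if remaining > 1e-9:
        raise ValueError("demand exceeds total capacity of the stack")
    return total_g


# Hypothetical grid on a sunny day: (source, available kWh, gCO2 per kWh).
STACK = [
    ("solar", 90, 0.0),
    ("other mix", 9, 100.0),   # illustrative intensity, not a measured value
    ("gas", 50, 400.0),        # illustrative intensity, not a measured value
]

BASE_DEMAND_KWH = 100   # 90% solar, 9% mix, 1% gas, as in the thought experiment
EV_CHARGE_KWH = 10      # the optional extra load

average_intensity = emissions_g(BASE_DEMAND_KWH, STACK) / BASE_DEMAND_KWH
marginal_intensity = (
    emissions_g(BASE_DEMAND_KWH + EV_CHARGE_KWH, STACK)
    - emissions_g(BASE_DEMAND_KWH, STACK)
) / EV_CHARGE_KWH

print(f"average:  {average_intensity:.0f} gCO2/kWh")
print(f"marginal: {marginal_intensity:.0f} gCO2/kWh")
```

With these illustrative inputs the grid average looks very clean, yet every extra kilowatt hour of discretionary demand is met entirely by the gas plant, which is the point of the thought experiment.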

How likely are these demand figures?

There is a risk attached to the level of confidence in the projected growth of A.I. and data centre peak demand. Work done by utility companies and energy providers in preparation for the demand could be wasted if the bubble bursts or technology evolves to make A.I. more efficient and less energy hungry.

Constellation consider the proposed demand to be a two or even three times overestimate, simply because multiple sites are being worked up for what will eventually be a single site decision. Millions are being spent working up site plans which gives local contractors and the supply chain confidence they will go ahead, but in reality the eventual spend is so astronomical, that a few tens of millions working up multiple sites is nothing in the grand scheme of things.

There could also be brakes applied to the presumed adoption through legal, security and social resistance. Companies may not adopt the technology as quickly as predicted as it often requires staff re-skilling and potential issues with labour relations. High licensing costs to cover the huge investments being made may also provide a barrier.

Could the bubble burst? David Cahn, a partner at VC company Sequoia Capital, did the maths last year [4]. He estimated that to justify the capital expenditure implied by Nvidia’s revenue pipeline, their customers (A.I. providers) would need to generate annual revenues of $600 billion. Near-term revenues could generate $100 billion, leaving a $500 billion hole. Assuming the required revenue would come from a billion highly connected people in the world, each would have to spend $600 a year (double my mobile phone tariff), either as direct payments to A.I. providers or indirect payments for A.I. integrated services used at home or at work. Would you be prepared to pay that?

Maybe just charge the big users then. If just the top 100 million wealthiest people were charged, they or their employers would have to lay out $6,000 per year. This starts to restrict interest. Would you pay $500 a month for A.I. support? Actually it will be more now, as Cahn’s analysis was done nearly a year ago and Nvidia’s order book has only got bigger.
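The arithmetic behind these per-person figures is simple enough to lay out in Python. This is only a restatement of the numbers quoted above; the function name is illustrative.

```python
REQUIRED_REVENUE_USD = 600e9    # annual revenue needed, as quoted
NEAR_TERM_REVENUE_USD = 100e9   # near-term revenue estimate, as quoted


def annual_spend_per_person(required_revenue_usd, payers):
    """Revenue each payer must contribute per year to reach the target."""
    return required_revenue_usd / payers


shortfall_usd = REQUIRED_REVENUE_USD - NEAR_TERM_REVENUE_USD
broad_base = annual_spend_per_person(REQUIRED_REVENUE_USD, 1_000_000_000)
wealthy_only = annual_spend_per_person(REQUIRED_REVENUE_USD, 100_000_000)

print(f"Shortfall: ${shortfall_usd / 1e9:.0f} billion")
print(f"One billion payers: ${broad_base:,.0f} a year each")
print(f"100 million payers: ${wealthy_only:,.0f} a year, ${wealthy_only / 12:,.0f} a month")
```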

Until current levels of investment are justified, either capital markets will keep throwing money at A.I., or they will pause for thought. Experience from other bubbles suggests the ride ahead could be bumpy, slowing down demand and giving time for further emissions reduction in the electricity sector. Of course if the bubble bursts, so much capital has been invested in big tech that the resulting economic fallout would lead to a significant economic depression. That in itself would also lower emissions.

This information does not constitute financial advice!

Will future A.I. be as energy hungry?

Over the next decade, we can expect:

Smaller, more efficient models that rival large ones but use less energy and cost less to implement.

Specialised agents trained on domain-specific data (e.g. legal, medical, engineering) to provide expert-level support, replacing a proportion of the generative A.I. utilisation.

Autonomous systems capable of planning, reasoning, and acting with minimal human input, streamlining processes and leading to significant efficiency gains across the economy.

Ethical and regulatory frameworks to guide safe development, especially in areas like employment, privacy, and misinformation. It would be nice to think that energy and resource usage would be included in this, but it’s unlikely given the current political situation in the US.

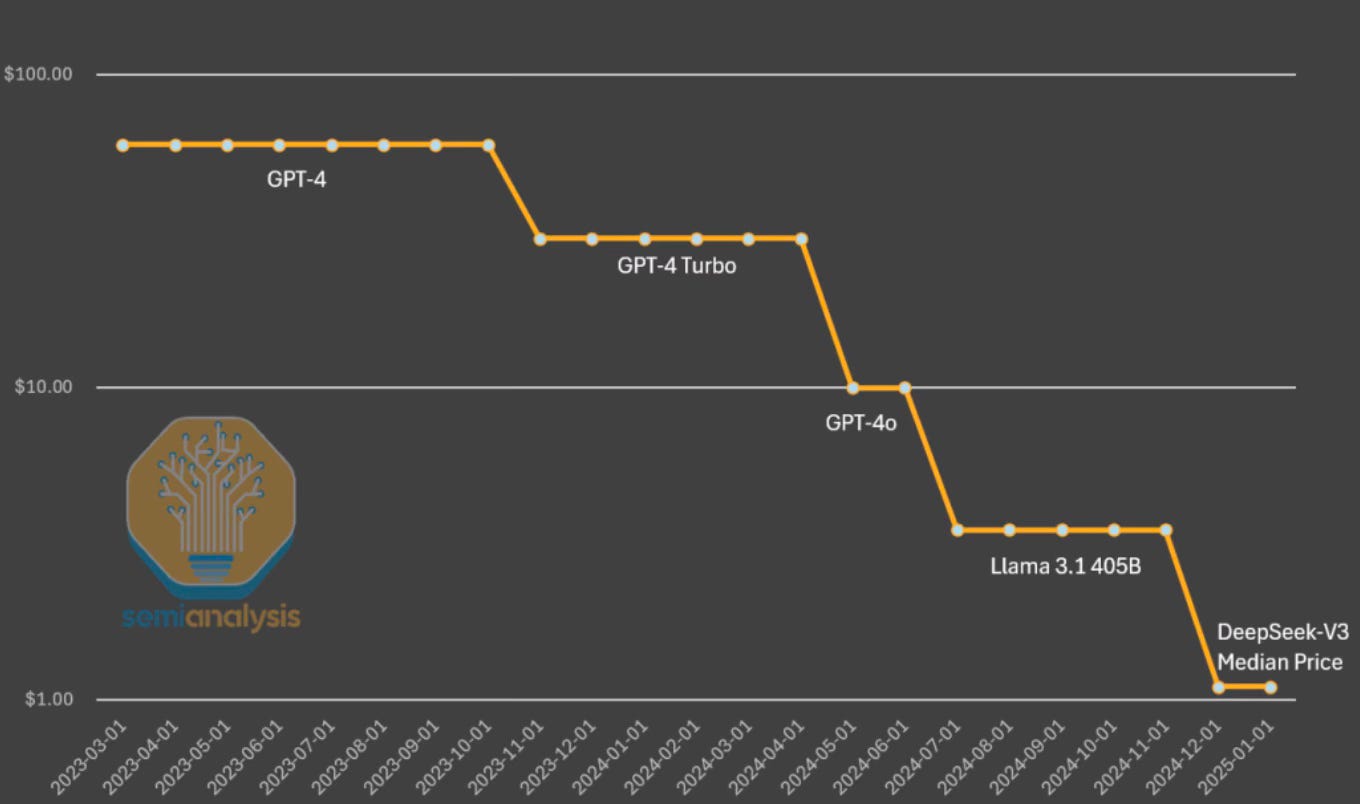

The Chinese DeepSeek R1 had a training cost of $294,000, which is substantially less than the tens of millions of dollars that rival models are thought to have cost. GPT-4 is estimated to have cost over $100 million to train. A recent peer-reviewed Nature paper [5] focuses on the technique that DeepSeek used to train R1 to ‘reason’. The researchers applied an efficient and automated version of a ‘trial, error and reward’ process called reinforcement learning. In this, the model learns reasoning strategies, such as verifying its own working out, without being influenced by human ideas about how to do so. The strategy resulted in a minimum of a 50x reduction in power usage during training.

In addition, software performance and processor design improvements are progressing at incredible speeds. Every year the computational requirements are slashed by a factor of 4 to 10 [6], resulting in significant energy savings per model. So whilst corporate and geopolitical competition, the growing number of systems and post-training optimisation may drive increases in energy use, there are other factors reducing it substantially.

Hardware advances towards specialised A.I. chips with smaller and more efficient internals also lower power per computation. Google and Nvidia have reported 80-fold and 25-fold improvements in the energy performance of their new A.I. chips, respectively. Algorithmic optimisation such as knowledge distillation works by training a smaller A.I. with a more complex one, using much less energy. Processor run time optimisation can schedule tasks to avoid processor downtime and peaks and troughs in energy demand [7].
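To give a flavour of how knowledge distillation works, the sketch below shows one common textbook formulation of the training signal: the small ‘student’ model is penalised for how far its softened output probabilities are from those of the large ‘teacher’. The logits, temperature and function names here are illustrative assumptions, not taken from any particular system mentioned in this article.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature gives softer outputs."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)                       # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from teacher to student soft targets, scaled by temperature squared."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0)
    return kl * temperature ** 2


# Hypothetical scores for three classes from a large teacher and a small student.
teacher_scores = [4.0, 1.0, 0.5]
good_student = [3.5, 1.2, 0.4]
poor_student = [1.0, 4.0, 0.5]

print(distillation_loss(teacher_scores, good_student))
print(distillation_loss(teacher_scores, poor_student))
```

The student that ranks the classes the same way as the teacher gets a much smaller loss, so training pushes the small model towards the large model's behaviour without repeating the full training run.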

There are efficiencies in data preparation as well. The largest models are already trained on all the data available. There is little point training bigger networks on the same data, so new large system training from scratch may diminish. Data quality is becoming more important than data quantity. When the data is properly filtered the quantity is reduced, which makes the training faster and less energy intensive. Open source models that can be updated with target data rather than trained from scratch also significantly reduce energy usage in training.

Looking further ahead to when quantum computing advances to the point where it can be used to run A.I., energy requirements will evaporate. Quantum machines are anticipated to carry out a million times as many operations in the same time, and energy cost, as conventional processors. A.I. could be used to accelerate the development of quantum computing, bringing this transition closer.

A potential medium term decline in data centre power usage from reinforcement learning strategies, software and hardware evolution and ultimately quantum computing could even result in more inefficient fossil fuel assets being built over the next 5-10 years that are not needed or used. Over supply would see energy prices drop, so that investment in renewables could be hampered, even though they would have been cheaper in the long run. Conversely if data centres were built with renewable power supplies, those assets could then be used by others to accelerate the energy transition if data centre energy demand dropped.

Water usage

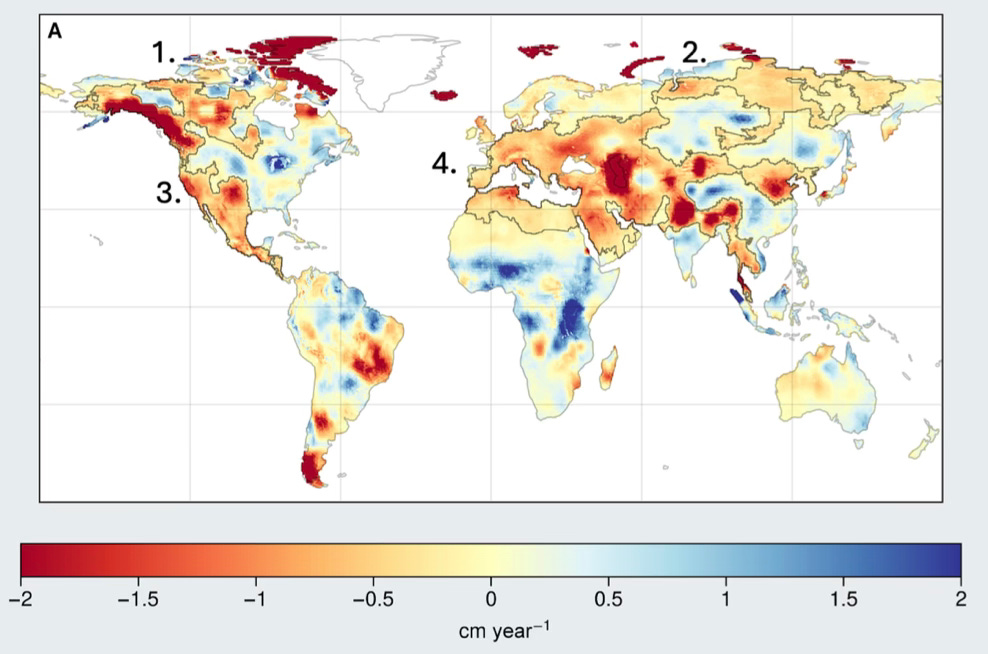

According to Joshua et al., the average data centre uses 1.7 litres of cooling water per kilowatt hour of energy consumed [8]. Freshwater is depleting rapidly in many areas of the world, including the west coast region of the US. Groundwater depletion is now driving more sea level rise than all the land based snow and glacier decline (apart from Greenland and Antarctica).

Using the 1.7 litre/kWh value suggests that freshwater usage by data centres in 2024 was 705 million tonnes. This would increase to 1,600 million tonnes by 2030 and potentially more than 2,000 million tonnes by 2035. If you prefer to think in terms of Olympic swimming pools, that equates to a usage of 775 pools per day in 2024, increasing to 1,750 a day in 2030 and 2,250 a day by 2035.
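These water figures follow directly from the IEA energy projections quoted earlier, and the conversion can be sketched in a few lines of Python. The assumptions are that one litre of water weighs one kilogram and that an Olympic pool holds 2,500 cubic metres (50m x 25m x 2m); the function names are illustrative.

```python
LITRES_PER_KWH = 1.7            # cooling water per kWh, as quoted
KWH_PER_TWH = 1e9
LITRES_PER_TONNE = 1000         # assumption: 1 litre of water weighs 1 kg
OLYMPIC_POOL_LITRES = 2.5e6     # assumption: 50m x 25m x 2m pool


def water_million_tonnes(energy_twh):
    """Annual cooling water in millions of tonnes for a given annual energy use."""
    litres = energy_twh * KWH_PER_TWH * LITRES_PER_KWH
    return litres / LITRES_PER_TONNE / 1e6


def pools_per_day(energy_twh):
    """The same water use expressed as Olympic pools per day."""
    litres = energy_twh * KWH_PER_TWH * LITRES_PER_KWH
    return litres / 365 / OLYMPIC_POOL_LITRES


# IEA data centre energy figures in TWh, as quoted earlier in the article.
for year, twh in [(2024, 415), (2030, 945), (2035, 1200)]:
    print(f"{year}: {water_million_tonnes(twh):,.0f} million tonnes, "
          f"{pools_per_day(twh):,.0f} pools a day")
```

The results land within about 1% of the rounded figures quoted above.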

In the US, water demand has actually declined in recent decades despite growth in both population and GDP. The main reasons for this are more efficient devices such as low flush toilets, shower heads and washing machines, but also the decline and offshoring of high water use manufacturing industries. Despite this decline in usage, water table levels, aquifer levels and river flow are all in steep decline, showing that even current demand is unsustainable. Adding huge water demand for data centres may not even be viable against this backdrop.

It’s not just the US which is losing groundwater. A recent paper shows that the world is losing freshwater at dangerous rates [9]. The satellite study shows dramatic losses since 2002 driven by a combination of climate change, unsustainable groundwater use and extreme droughts.

Global patterns of rainfall, evaporation and terrestrial water storage are shifting in response to climate change. The historically dry areas of the planet are becoming drier while the historically wet areas are becoming wetter. This was predicted by climate models but the reality is more extreme and, more worryingly, out of balance.

75% of the world population, across 101 countries, are living in areas that are becoming drier. As a result, pumped groundwater is increasingly being relied on, depleting reserves at an unsustainable rate. Siting data centres in areas not experiencing groundwater shrinkage could be a challenge.

Electric cooling reduces water use, but at the cost of additional electricity requirements. In the long term, however, this may be the preferred solution, especially in the western US, Europe and parts of China.

Efficiency Gains

There is another side to this story though. A.I. can bring a lot of benefits to other energy users, in turn making them much more efficient. Is there potential for A.I. to be a net positive in the energy transition and therefore help in the fight against climate change?

According to Ember, data centre growth ranks just 5th in the list of increasing demand for electricity by 2030. Industry, electric transportation, appliances and space heating are all predicted to create more growth than A.I. does. This will drive the energy transition in many territories, enabling the growth of renewables and in the process releasing a lot of countries from the grip of the petrostates.

Scaling A.I. applications across the energy system will also bring efficiency benefits. Predictive maintenance optimised by A.I. will increase reliability and with it reduce over-capacity requirements at the same time as optimising equipment lifetime. Autonomous operations will reduce costs while grid optimisation will ensure efficient running of the whole system tailored to minimising fossil fuel use.

The area of Smart Grids is gaining traction across the world [10]. Smaller local networks are optimised to use local generation and storage for predicted demand, all controlled by A.I. Systems called virtual power plants (VPPs) treat the local grid as a single utility, matching demand, supply and storage with usage patterns and weather forecasts. This greatly increases efficiency and makes the most of renewable generation assets, so minimising emissions. Systems can even autonomously link to home appliances such as washing machines and fridges, scheduling their operation automatically. A smart fridge can be told to cool more when renewable energy is abundant, and turn down to smooth out demand peaks, with no impact on, or even involvement of, homeowners at all.

A.I. optimisation can also be applied to grid scale, home and EV battery management and smart charging. This could have benefits for battery longevity as well as minimising fossil fuel use on the grid. A.I. optimisation of second life batteries, which provide grid scale storage from used EV components, could also make fuller use of materials before recycling. Vehicle to Grid chargers and enabled EVs can push unused charge back into the home or grid during peak demand and be recharged overnight, ready for the school run, all controlled by A.I.

A recent UK funded project in the Orkney Islands [11] used A.I. to predict wind power availability, messaging EV owners with optimum charging windows to reduce wind turbine curtailments on the islands. A.I. optimised building management systems can schedule heating systems to operate at the best times for energy mix and efficiency. A.I. is being used by one UK heat pump installer to optimise system design for each home, avoiding over-specifying the power requirements.
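As a simplified illustration of the scheduling idea behind charging windows, the sketch below picks the run of consecutive hours with the most forecast surplus wind power. It is not the method used by the Orkney project; the forecast values and function name are hypothetical, and a real system would get the forecast from a trained prediction model.

```python
def best_charging_window(surplus_forecast_kw, hours_needed):
    """Return (start_hour, total_surplus) for the best run of consecutive hours."""
    if not 0 < hours_needed <= len(surplus_forecast_kw):
        raise ValueError("hours_needed must fit inside the forecast horizon")
    best_start, best_total = 0, float("-inf")
    for start in range(len(surplus_forecast_kw) - hours_needed + 1):
        total = sum(surplus_forecast_kw[start:start + hours_needed])
        if total > best_total:          # ties keep the earliest window
            best_start, best_total = start, total
    return best_start, best_total


# Hypothetical hourly forecast of wind power that would otherwise be curtailed (kW).
forecast = [0, 2, 5, 9, 8, 3, 1, 0]

start_hour, surplus = best_charging_window(forecast, hours_needed=3)
print(f"Charge for 3 hours starting at hour {start_hour} (forecast surplus {surplus} kW-hours)")
```

For this made-up forecast the best three-hour window starts at hour 2, when the surplus is highest.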

A.I. could also be used for automated permitting, speeding up the planning and installation times required for new sites. It could also be used in the R&D setting to optimise material discovery and all aspects of product and system design for greater efficiency.

There is no doubt that A.I. will also bring benefits to sectors like healthcare and reduce waste in other sectors, but the Jevons Paradox also needs to be considered.

Whenever a new technology is introduced that increases efficiency, the overall spend doesn’t always drop; people just use it more. Computing power in Computational Fluid Dynamics is a good example. When engineers get a faster PC, they don’t run their old mesh models faster, they build meshes with more nodes that can run in the same time. With cloud computing, the sky is the limit and so models have become huge, solely limited by cost. The same is true with complex climate models. The result is always to use up any efficiency gains by increasing the compute available within the budget, not to shrink the budget. The plans for CMIP7 (Coupled Model Intercomparison Project) are far grander than CMIP6, with higher spatial resolution and many more feedbacks and interconnected models involved.

In the long term, once the energy transition is fully underway and unstoppable, which might only be a few years away if current global trends continue, A.I. powered by solar and wind generation will be much less of a concern from the emissions perspective. A.I. is already reducing the marginal cost of information to the marginal cost of electricity. Taken together, A.I. and the growth of renewables will see the marginal cost of energy and information fall dramatically.

In the meantime there are potential benefits to running the utility side of A.I. in territories with high renewables and water availability. Companies may want to keep the training and development servers in their own countries, but once trained, systems could be deployed to minimise emissions and benefit from more sustainable water availability.

The negative side to this story is that A.I. has benefits to other more damaging sectors too [12]. It’s been reported that extensive use of A.I. within the fossil fuel sector has led to a 5% production boost through enhanced exploration and extraction uses. A.I. is also boosting the fast fashion industry by driving online adaptive and responsive advertising, increasing sales. Fast fashion is the most polluting industry sector after agriculture and construction. Self-driving cars may also encourage people to travel more and further in their car rather than taking public transport.

Using A.I. within climate science

Earth system models (ESMs) are an essential tool for understanding the Earth’s climate and the consequences of climate change, which are crucial to the design of policies to address the climate crisis. Modelling the Earth is inherently complex. ESMs are among the most challenging applications that the high-performance computing industry has had to face, requiring the most powerful computers available, consuming large amounts of energy in computer power, and producing massive amounts of data in the process.

Different models are developed to deal with different aspects of the Earth’s system. These are then coupled together so that they can be run in parallel, allowing, for example, ocean surface changes to be connected with atmospheric effects and rainfall, and heat uptake to be linked to ocean current changes.

There have been six Coupled Model Intercomparison Project (CMIP) cycles to date. The CMIP6 models were used by the IPCC to create the last set of climate assessment reports published in 2021. Plans are currently underway for CMIP7 which should inform the next rounds of IPCC reports towards the end of the decade.

The models are hugely complex, but still miss a lot of interconnections and feedbacks that might seem obvious, but were too complex or insufficiently accurately modelled at the time to warrant inclusion. For example Greenland ice sheet dynamics models are not included in CMIP6 sea level rise or ocean current change predictions, even though ocean water freshening is a critical aspect of the ocean system.

A recent study examined the energy and carbon costs of the CMIP6 campaign [13]. A total of 21 model projects were endorsed in CMIP6, which included 190 different experiments that were used to simulate 40,000 years and produced around 40 Petabytes of data in total. 1.1 billion core hours were used in total, using almost 50 TJ of energy with estimated carbon emissions of 1,692 tonnes of CO2e.
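To put those CMIP6 totals into more familiar units, the sketch below converts them into energy per core hour and an implied carbon intensity. These derived values are simple arithmetic on the figures quoted above (treating ‘almost 50 TJ’ as 50 TJ) and are not numbers reported by the study itself.

```python
ENERGY_TJ = 50            # "almost 50 TJ", treated here as 50 TJ
CORE_HOURS = 1.1e9        # core hours used, as quoted
EMISSIONS_TONNES = 1692   # tonnes of CO2e, as quoted

JOULES_PER_TJ = 1e12
JOULES_PER_KWH = 3.6e6

energy_kwh = ENERGY_TJ * JOULES_PER_TJ / JOULES_PER_KWH
energy_gwh = energy_kwh / 1e6

# Average energy drawn per core hour, in watt-hours.
wh_per_core_hour = energy_kwh * 1000 / CORE_HOURS

# Implied average carbon intensity of the electricity used, in gCO2e per kWh.
intensity_g_per_kwh = EMISSIONS_TONNES * 1e6 / energy_kwh

print(f"Energy: {energy_gwh:.1f} GWh")
print(f"Per core hour: {wh_per_core_hour:.1f} Wh")
print(f"Implied intensity: {intensity_g_per_kwh:.0f} gCO2e/kWh")
```

That is roughly 14 GWh in total, which gives a sense of the savings on offer if an A.I. emulator can reproduce model behaviour at a fraction of the compute.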

A.I. in the form of deep learning neural networks is showing considerable promise in matching the performance of CMIP6 models, allowing additional experiments and particular focuses to be analysed at a fraction of the cost. A.I. can be trained on model runs and then used to run experiments using different parameters or start conditions in a fraction of the time, and therefore cost [14].

A.I. is also being used in near future extreme weather prediction. Google’s DeepMind system has been employed for the first time this year in the tracking and forecasting of Atlantic hurricanes. Hurricane Erin, a huge category 5 storm which tore through the Atlantic in August, was its first real test. It outperformed the European and NOAA model ensembles in accurately predicting the storm’s path, which fortunately didn’t make landfall, although the storm surge and rip tides affected much of the US east coast [15]. Preliminary analysis by NOAA suggests that throughout the 2025 season the A.I. system was the top performing model for both track and intensity in both the Atlantic and Pacific basins. The model is being evaluated this season in partnership with the National Hurricane Center and gave NHC forecasters extra confidence to issue uncharacteristically aggressive forecasts in the days leading up to Hurricane Melissa, sounding the alarm in Jamaica and Cuba well in advance of the season’s strongest and most impactful hurricane [16].

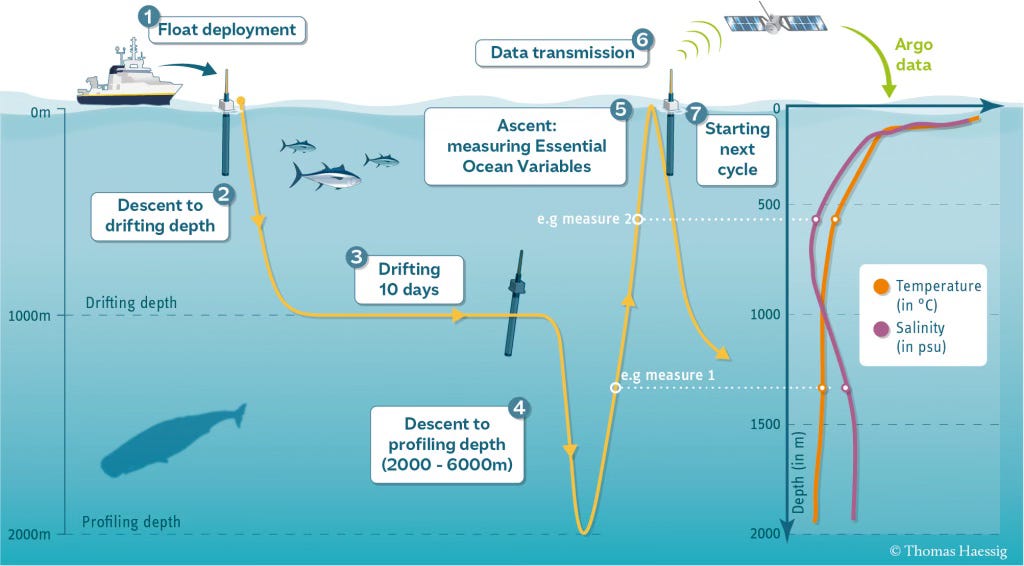

When it comes to data analysis, A.I. has a great deal to offer in climate science. ARGO is a good example of a programme whose data A.I. is being used to interpret. The ARGO programme is a 30 country collaborative effort to collect data from the oceans using autonomous floats. Over 2,000 of these floats are scattered around the world. Each float (costing up to $185,000) runs continual missions after being launched from a ship. It first sinks to a depth of 1,000m then drifts on the currents for 10 days. It then descends further to between 2,000 and 6,000m before ascending again, taking measurements of the water column. These typically include temperature and salinity profiles but can also include biogeochemistry, oxygen saturation, pH and nitrate levels. Once on the surface, the floats relay the data by satellite before diving again for another run, repeating the process for up to 10 years. The fleet provides 13,000 profiles every month from all over the globe. The data is free to access and has been used in over 6,000 scientific publications and referred to or used in over 500 PhD theses.

The Atlantic Meridional Overturning Circulation (AMOC) is perhaps the most threatening tipping element that scientists are worried about. When it shuts down, it will radically change the climate of Western and Northern Europe as well as increasing sea levels by up to a metre along the US east coast. ARGO floats provide valuable data on current flows but are sparse in time and location. A recent A.I. study used the data to effectively fill in the gaps and provide a cost effective measure of AMOC strength [17]. The team were then able to use the trained A.I. to understand the relevance of specific measures in affecting AMOC weakening, providing focus for further missions and analysis.

At an even bigger scale, the Enterprise Neurosystem project18 is bringing global data together into a universal A.I. for the climate. It is integrating data sources ranging from beehive monitoring to satellite data, and from rain gauges to species counts from remote cameras. In addition to the global system, country-wide and state-wide systems can help planners detect and prepare for climate events and future trends. This project is running in conjunction with the UNFCCC as well as many international government agencies. Early systems are targeting Global South countries such as Uganda and Tanzania in addition to states such as California. The work includes standards for inter-modal communication and interaction.

The next decade is critical in climate science. Changes are accelerating and trends from the past are becoming less reliable as indicators of the future. A.I. has a huge amount to offer, both in data analysis and in modelling and prediction. To be successful, however, there are some key governance challenges that must be considered and addressed as a priority.

There is a growing need for standardisation within the field, even at an international level. This will help ensure reliability, comparability and ethical development of A.I. climate models and solutions across the globe.

The first step towards this goal is data harmonisation. Data formats and protocols need to be established to ensure seamless exchange and integration of datasets from different sources. Data quality and validation procedures are also required, to catch problems and give confidence in the accuracy, completeness and consistency of the data. Researchers worldwide need free and easy access to shared data, especially in developing nations that need it for resilience planning.
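To make the idea of harmonisation concrete, here is a minimal sketch in Python. It assumes two hypothetical data sources that report the same kind of temperature reading in different formats, converts both to one shared record, and runs a simple plausibility check. The field names, station identifiers and the temperature range are illustrative assumptions, not part of any real standard.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Reading:
    """One harmonised observation: UTC timestamp, station id, temperature in Celsius."""
    time_utc: datetime
    station: str
    temp_c: float


def from_source_a(row: dict) -> Reading:
    # Hypothetical source A: ISO 8601 timestamps with an explicit UTC offset,
    # temperature already in Celsius.
    return Reading(
        time_utc=datetime.fromisoformat(row["timestamp"]).astimezone(timezone.utc),
        station=row["station_id"],
        temp_c=float(row["temp_c"]),
    )


def from_source_b(row: dict) -> Reading:
    # Hypothetical source B: Unix epoch seconds, temperature in Fahrenheit.
    return Reading(
        time_utc=datetime.fromtimestamp(row["epoch"], tz=timezone.utc),
        station=row["site"],
        temp_c=(float(row["temp_f"]) - 32.0) * 5.0 / 9.0,
    )


def validate(reading: Reading) -> list[str]:
    """Return a list of problems; an empty list means the reading passed."""
    problems = []
    if not reading.station:
        problems.append("missing station id")
    if not -90.0 <= reading.temp_c <= 60.0:  # illustrative plausibility range
        problems.append(f"implausible temperature: {reading.temp_c:.1f} C")
    return problems


# Two readings of the same moment, reported in different formats.
a = from_source_a({"timestamp": "2025-08-01T12:00:00+01:00",
                   "station_id": "UG-001", "temp_c": "24.5"})
b = from_source_b({"epoch": 1754046000, "site": "CA-042", "temp_f": 77.0})

for reading in (a, b):
    print(reading, validate(reading) or "ok")
```

Once both sources map onto the same record, downstream models and validation checks only need to be written once, which is the practical payoff of agreeing formats up front.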

A.I. climate models need to be transparent and accessible for validation. Black box models create barriers to trust and provide opportunities for vested interests and climate deniers to create doubt and mistrust in their outputs. Work could include standardised documentation, benchmarking protocols and open access for repeatability.

There are also ethical and societal considerations including bias detection and mitigation, accountable and responsible use practices and inclusivity.

There is an urgent need for international standards to be developed ahead of the flood tide of uses and applications to ensure best practice as the technology rapidly progresses.19

Using A.I. within climate adaptation

A.I. is revolutionising climate adaptation by enhancing prediction, monitoring, and sustainable decision-making.

It is transforming how we understand and respond to environmental challenges and extreme weather. A.I.-powered climate and weather forecasting models can process vast datasets, from satellite imagery to ocean temperatures, to predict extreme weather events like floods, droughts, and hurricanes with unprecedented accuracy. This information can be communicated rapidly, in real time, to those who need it most, allowing communities to prepare and adapt more effectively. This is especially valuable in developing regions which cannot easily afford the scale of modern weather forecasting services.

In agriculture, A.I. helps optimise irrigation, reduce fertiliser use, and monitor crop health, thereby lowering emissions and conserving resources. A.I.-driven systems also track deforestation and carbon storage using satellite data, enabling governments and NGOs to protect vulnerable ecosystems and report more accurate information to balance carbon budgets and inform policymakers.

How can society balance these risks and rewards?

As with every new technology, there are costs as well as benefits. A.I. is potentially different in that there are so many applications affecting so many sectors that it is difficult to manage the overall risks.

Balancing the risks and benefits of A.I. over the next decade requires effective governance, inclusive design where the users and those impacted by A.I. systems have a say in how they are developed, and a much more rigorous ethical framework for developers, deployers, and users of A.I.

Transparency is important to allow the public to make informed decisions. Google’s recent disclosure that a median query of their A.I. systems uses just 0.24 Wh illustrates the problem, since it may lead the public to think the energy use is trivial. Reporting the median skews the figure towards the low end of the usage and energy profile. If 60% of usage is in search results and 40% in Gemini chats, the median will be one of the low-energy search queries, ignoring the high-energy part of the picture and the real average cost, which will be much higher.
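The gap between median and mean is easy to demonstrate. The short Python sketch below uses the 60/40 split from the paragraph above with made-up energy figures per query; the two watt-hour values are assumptions chosen purely for illustration and are not measurements from any provider.

```python
from statistics import mean, median

# Hypothetical per-query energy figures in watt-hours. These are
# illustrative assumptions only, not measured values.
LIGHT_QUERY_WH = 0.3  # assumed low-energy query (e.g. a search result)
HEAVY_QUERY_WH = 3.0  # assumed high-energy query (e.g. a long chat)


def energy_profile(n_queries: int = 100, light_share: float = 0.6) -> list[float]:
    """Build a list of per-query energy values with the given share of light queries."""
    n_light = round(n_queries * light_share)
    return [LIGHT_QUERY_WH] * n_light + [HEAVY_QUERY_WH] * (n_queries - n_light)


queries = energy_profile()
median_wh = median(queries)  # lands on a light query, since they are the majority
mean_wh = mean(queries)      # reflects the heavy queries as well

print(f"median: {median_wh:.2f} Wh, mean: {mean_wh:.2f} Wh")
print(f"mean is {mean_wh / median_wh:.1f}x the median")
```

With these assumed numbers the median simply reports the light query, while the mean, which is what determines total energy demand, comes out several times higher.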

Even when costs are available, they rarely include the environmental costs or the impacts on third parties totally unconnected with the application. Until a realistic carbon price is charged on energy generation, fossil fuel companies will continue to receive their huge subsidy (currently $7 trillion a year or 6% of GDP, plus immunity from liability for the deaths of 8 million people a year). Given the projected importance of A.I., it can’t be allowed to follow that model. True costs must be calculated, communicated and charged from the outset.

It’s probably true to say that using A.I. to optimise energy systems and grids will be beneficial and result in better energy efficiency. But those benefits need to pay for the additional impacts being unleashed through less useful applications. Are the benefits of creating videos for fun worth the extinction of coral reefs or the flooding of people’s homes? When it’s 42°C outside, would you rather have energy for air conditioning or a chat with your favourite A.I. boyfriend?

The scale and timing of the A.I. explosion are also relevant when linked to climate change risks. The current acceleration in global warming and the trajectory over the next few decades are largely baked in, since there is huge inertia within the climate and ocean systems. Even if emissions were stopped overnight, temperatures would still rise and probably exceed 2°C. Without A.I. expansion, we are still heading for at least 2.5°C by 2050, which will be a monumental challenge for global society to cope with. That challenge could be much harder without A.I. to help us.

The way forward

Ensuring A.I. is safe is a significant and multi-faceted challenge. A.I. Alignment needs to include these climate and environmental risks in addition to the other safety concerns.

Developers should be made aware of the environmental costs associated with the training and usage of their systems. Users too may benefit from being able to access energy and water costs when making model and supplier choices. Systems that make use of locally abundant clean energy and minimise water waste should be able to promote these benefits, potentially through an independent rating system. If any standards are being formulated for Ethical A.I., these need to include the carbon intensity and water usage requirements of systems meeting these standards.
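As a rough illustration of the kind of comparison a rating system could support, the Python sketch below estimates carbon per query as energy used multiplied by the carbon intensity of the grid supplying it, and ranks suppliers on that basis. The supplier names and every number are hypothetical assumptions; a real rating would need audited figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Supplier:
    name: str
    energy_wh_per_query: float  # electricity used per query (assumed)
    grid_gco2_per_kwh: float    # carbon intensity of the supplying grid (assumed)
    water_ml_per_query: float   # cooling water consumed per query (assumed)

    @property
    def gco2_per_query(self) -> float:
        # Convert Wh to kWh, then multiply by grams of CO2 per kWh.
        return self.energy_wh_per_query / 1000.0 * self.grid_gco2_per_kwh


# Hypothetical suppliers with identical energy use but different grids.
suppliers = [
    Supplier("Supplier A (fossil-heavy grid)", 1.0, 500.0, 2.0),
    Supplier("Supplier B (mostly renewable grid)", 1.0, 50.0, 0.5),
]

# Rank from lowest to highest carbon per query.
ranked = sorted(suppliers, key=lambda s: s.gco2_per_query)

for s in ranked:
    print(f"{s.name}: {s.gco2_per_query:.3f} gCO2 and "
          f"{s.water_ml_per_query:.1f} ml water per query")
```

The point of the sketch is that two services with the same energy use can differ tenfold in emissions depending on where their electricity comes from, which is information users currently cannot see.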

More work is needed to track, document and articulate the costs and benefits of A.I. applications, including scope 1, 2 and 3 costs and relevant emissions.

A.I. is not just another incremental technology advance; it is set to revolutionise the way people live and work for the rest of the century. Decisions about governance, ethics and costs need to be made now in a manner suitable for the task. It is not just about profit; it’s about trying to minimise harm to people and the ecosystem we all ultimately depend on.

Part of the challenge is that the speed of development is outpacing legislation. International standards bodies, including the IEEE, are stepping in with guidelines or standards. Two principles are key: transparency and accountability. You should always know when you are interacting with A.I., and you should know when it is being ‘done to you’. The world needs to develop and strengthen both principles if we are to enjoy the benefits of A.I. while managing the risks effectively. We need to ensure that those who develop or use A.I. to spread misinformation, to defraud and steal, or to harm others through deepfakes and other misuses are held to account for their actions. Right now, ‘Big Tech’ is trying to avoid regulation and legislation that would hold them accountable, and the debate rages over the balance between innovation and regulation. They have an available playbook, already used by Big Tobacco, Big Pharma and Big Oil. Society needs to ensure it is not regurgitated by Big Tech.

Educating the public is an essential requirement as we move into this new era in which A.I. disrupts our work and our lives. We need to empower citizens to understand and critically engage with A.I. tools. Education at every level is needed to help people learn how to use A.I. tools effectively, appropriately, economically and safely, and we must start at the earliest ages possible. Our children need to be skilled users of A.I. if they are to prosper in the A.I.-powered world.

On the benefits side, A.I. should be harnessed to solve pressing global challenges—from climate modelling to healthcare access. Embedding ethical principles into every stage of development will ensure A.I. delivers on its investment.

Conclusions

Large Generative A.I. models are widely reported as being very energy hungry, and this is indeed true. However, a large amount of A.I. technology runs on much smaller, more efficient machines. The two are often treated the same, but the distinction is important. For example, generating the A.I. image at the top of this article required a much smaller model than GPT-4. The energy usage was far less than if we’d drawn it with Photoshop on a laptop, and the A.I. used in last week’s article20 to analyse the 2024 jump in atmospheric CO₂ trained in about 4 seconds on my aging iMac.

The large models and the massive data centres required for the scaling of A.I., and in particular for the massive Generative A.I. systems, are however still a cause for huge concern, especially in the short term. They will increase energy demand substantially and delay the energy transition, pushing up emissions and costing the public more, both for their own energy and in climate impacts.

The net climate impact of A.I. will probably not be as severe as some are forecasting, but it will still be negative. Efficiencies are progressing quickly and a lot of duplication is being included in forecasts. A.I. is still in the growth stage of the hype curve, and some market readjustment is inevitable, which will lower the eventual impact.

A.I. has a great deal to offer in advancing climate science and improving extreme weather forecasting, which has the potential to optimise adaptation measures and significantly reduce death tolls, economic loss and damage. It also has the power to convince the voting public of the veracity of the threat, so that they start to take action and trigger the social tipping points of demanding change.

Standards and careful deployment are highly important to ensure systems are reliable, accurate and trustworthy. If we are careful, A.I. can be a positive driver for climate change mitigation and adaptation, but we need to be mindful and responsible in its use. By embedding ethical principles into every stage of development, we can ensure A.I. serves humanity and the environment, not just profit, and becomes a trusted partner in shaping a liveable future.

Liebreich, Generative A.I. - The power and the glory, BloombergNEF, December 24 2024, https://about.bnef.com/insights/clean-energy/liebreich-generative-ai-the-power-and-the-glory/

Guo, D., Yang, D., Zhang, H. et al. DeepSeek-R1 incentivizes reasoning in LLMs through reinforcement learning. Nature 645, 633–638 (2025). https://doi.org/10.1038/s41586-025-09422-z

Christian Bogmans, Patricia Gomez-Gonzalez, Ganchimeg Ganpurev, Giovanni Melina, Andrea Pescatori, and Sneha D Thube. “Power Hungry: How A.I. Will Drive Energy Demand”, IMF Working Papers 2025, 081 (2025), accessed October 8, 2025, https://doi.org/10.5089/9798229007207.001

The Oxford Institute for Energy Forum, Artificial intelligence and its implications for electricity systems, May 2025 Issue 145, https://www.oxfordenergy.org/publications/artificial-intelligence-and-its-implications-for-electricity-systems-issue-145/

Joshua, Chidiebere & Marvellous, Ayodele & Matthew, Bamidele & Pezzè, Mauro & Abrahão, Silvia & Penzenstadler, Birgit & Mandal, Ashis & Nadim, Md & Schultz, Ulrik. (2025). Sustainable AI: Measuring and Reducing Carbon Footprint in Model Training and Deployment. EAI Endorsed Transactions on Tourism, Technology and Intelligence.

Hrishikesh A. Chandanpurkar et al., Unprecedented continental drying, shrinking freshwater availability, and increasing land contributions to sea level rise. Sci. Adv. 11, eadx0298 (2025). DOI: 10.1126/sciadv.adx0298

Rajaperumal, T.A., Columbus, C.C. Transforming the electrical grid: the role of A.I. in advancing smart, sustainable, and secure energy systems. Energy Inform 8, 51 (2025). https://doi.org/10.1186/s42162-024-00461-w

Reflex Orkney Project - https://www.reflexorkney.co.uk

https://www.climatechange.ai/

Acosta, M. C. et al. The computational and energy cost of simulation and storage for climate science: lessons from CMIP6, Geosci. Model Dev., 17, 3081–3098, https://doi.org/10.5194/gmd-17-3081-2024, 2024.

Cresswell‐Clay, N., et al. (2025). A deep learning Earth system model for efficient simulation of the observed climate. AGU Advances, 6, e2025AV001706. https://doi.org/10.1029/2025AV001706

Wölker, Y., Rath, W., Renz, M., and Biastoch, A.: Estimating the AMOC from Argo Profiles with Machine Learning Trained on Ocean Simulations, EGUsphere [preprint], https://doi.org/10.5194/egusphere-2025-2782, 2025.

https://www.enterpriseneurosystem.org/

Sustainability Directory, International Standards for A.I. Climate Models, 15th March 2025, https://prism.sustainability-directory.com/scenario/international-standards-for-ai-climate-models/

Are Earth’s natural carbon sinks collapsing?

Hi Tom, I think I commented on this before, but many colder countries could directly benefit from this if the heated water is passed into the residential system. You state that 40% of electrical costs are in cooling, and water, as we know, is an issue globally. If this heated and extracted product is then redistributed for a secondary use in cities and industry, there should be substantial flow-on benefits to consumers. Many high latitude countries need insulated and heated water systems, buried deep below the frost zone just to remain viable, and for most domestic applications the water is then heated to 50-60 degrees for household use. Much of this water could be coupled with a data center to minimize the waste. Simply providing water to households 5-10 degrees warmer and at lower processing costs should bring vast benefits across all the communities involved.

Let's see what happens